And Why Single-Use LLMs Fall Short in Delivering Tailored Business Insights

In today's data-driven business landscape, tailored insights drive strategic decision-making, innovation, and competitive advantage. As data volumes keep growing, the ability to extract precise, contextualized insights becomes paramount. Large Language Models (LLMs) have emerged as powerful tools for data analysis, customer segmentation, and predictive analytics. However, single-use LLMs often fall short of delivering truly tailored and accurate insights, which calls for a more nuanced approach.

Key Takeaway Summary:

- Single-use LLMs exhibit inconsistent performance across different tasks due to a lack of explicit task-specific training.

- LLMs can misinterpret context and produce hallucination errors (incorrect or fabricated information) due to their inability to verify facts.

- Retrieval-Augmented Generation (RAG) approaches, such as RAG-Sequence and RAG-Token, aim to enhance LLM performance by integrating external knowledge sources.

- Addressing hallucination errors and integrating LLMs with human oversight and verification can help mitigate their limitations and deliver more accurate and tailored business insights.

Limitations of Single-Use LLMs

Emergent Capabilities and Task-Specific Performance

One of the fundamental challenges with single-use LLMs is that their capabilities are emergent rather than explicitly trained, so performance is uneven across tasks, even within the same domain. A model may excel in some areas and falter in others, producing inconsistent and sometimes unpredictable results.

For instance, an LLM trained on a broad corpus of text may excel at generating coherent and fluent language, but it may struggle with more specialized tasks, such as interpreting financial data or analyzing technical specifications. This lack of explicit task-specific training can result in suboptimal insights, hindering the ability of businesses to make informed decisions based on the LLM's output.

Inability to Understand Context and Verify Facts

Another significant limitation of single-use LLMs is their inability to understand context and verify facts. While LLMs can generate human-like text based on the patterns they have learned from their training data, they often lack a deep understanding of the underlying context and factual accuracy of the information they produce.

This limitation can lead to contextual misunderstandings, where the LLM fails to grasp the nuances of a particular situation or domain, resulting in insights that are misaligned with the business's needs. Additionally, LLMs are prone to hallucination errors, where they generate incorrect or fabricated information, as they lack the ability to cross-reference and verify facts against external sources.

In a business setting, where accurate and reliable insights are crucial for decision-making, these limitations can have severe consequences, potentially leading to costly mistakes or missed opportunities.

Retrieval-Augmented Generation (RAG)

To address the limitations of single-use LLMs, researchers and practitioners have explored various approaches, one of which is Retrieval-Augmented Generation (RAG). RAG aims to enhance the performance of LLMs by integrating external knowledge sources during the generation process.

Overview of RAG Approaches

There are two main RAG approaches: RAG-Sequence and RAG-Token. Both first retrieve a set of external documents for the query. RAG-Sequence then conditions on one retrieved document at a time for the entire answer and combines the per-document results, so each candidate answer is grounded in a single document. This method is often more cost-effective and has been widely adopted in applications such as customer service and content creation.

RAG-Token, by contrast, works at a finer granularity: each generated token can draw on a different retrieved document, so a single answer can combine evidence from several sources. While this can increase accuracy, it comes at a higher computational cost and may not be feasible for all use cases.
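To make the difference concrete, here is a minimal sketch of how the two variants combine evidence, following the formulation introduced with the original RAG models: RAG-Sequence weights whole-answer probabilities by document, while RAG-Token mixes documents at every token position. The probabilities are made-up toy numbers, and the sketch simplifies by treating per-token probabilities as fixed values rather than model outputs that depend on earlier tokens.

```python
from math import prod

def rag_sequence_prob(doc_probs, token_probs):
    """Score the whole answer under each document, then mix the results."""
    return sum(
        p_doc * prod(per_token)
        for p_doc, per_token in zip(doc_probs, token_probs)
    )

def rag_token_prob(doc_probs, token_probs):
    """Mix the documents separately at every token position, then multiply."""
    n_tokens = len(token_probs[0])
    return prod(
        sum(p_doc * per_token[i] for p_doc, per_token in zip(doc_probs, token_probs))
        for i in range(n_tokens)
    )

# Toy numbers (illustrative only): two retrieved documents, equally likely.
doc_probs = [0.5, 0.5]
# token_probs[d][i] = probability of the i-th answer token given document d.
# Document 0 supports the first token, document 1 supports the second.
token_probs = [
    [0.9, 0.1],
    [0.1, 0.9],
]

print(round(rag_sequence_prob(doc_probs, token_probs), 4))  # 0.09
print(round(rag_token_prob(doc_probs, token_probs), 4))     # 0.25
```

In this toy case the answer needs evidence from both documents, so the token-level mix assigns it a higher probability than the sequence-level mix, which can only lean on one document per candidate answer.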

Industry Applications and Challenges

RAG approaches have found applications in various industries, including customer service, content creation, and knowledge management. For example, in customer service, RAG-powered LLMs can retrieve relevant information from knowledge bases or product manuals to provide more accurate and tailored responses to customer inquiries.
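The customer service case can be sketched in a few lines. This is an illustration under stated assumptions, not a production design: the knowledge base entries are invented, `retrieve` ranks passages by simple word overlap (real systems typically use embedding-based search), and `build_prompt` is a hypothetical helper that only assembles the text an LLM would receive. No model is called.

```python
import re

def tokenize(text):
    """Lowercase the text and return its set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def retrieve(query, documents, k=2):
    """Return the k documents sharing the most words with the query."""
    query_words = tokenize(query)
    ranked = sorted(
        documents,
        key=lambda doc: len(query_words & tokenize(doc)),
        reverse=True,
    )
    return ranked[:k]

def build_prompt(query, passages):
    """Assemble a prompt that asks the model to stay within the passages."""
    context = "\n".join(f"- {p}" for p in passages)
    return (
        "Answer the customer using only the context below.\n\n"
        f"Context:\n{context}\n\nQuestion: {query}"
    )

# Invented knowledge base entries, for illustration only.
knowledge_base = [
    "To reset the router, hold the reset button for ten seconds.",
    "Refunds are processed within five business days.",
    "The warranty covers hardware defects for two years.",
]

question = "How do I reset my router?"
passages = retrieve(question, knowledge_base, k=1)
prompt = build_prompt(question, passages)
print(prompt)
```

The resulting prompt contains only the router passage, which is what keeps the model's answer anchored to the company's own documentation.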

However, the implementation of RAG approaches is not without challenges. One significant challenge is the increased computational resources required, particularly for the RAG-Token approach. Additionally, integrating external data sources and ensuring their relevance and timeliness can be a complex task, requiring careful data management and maintenance.

Overcoming Challenges

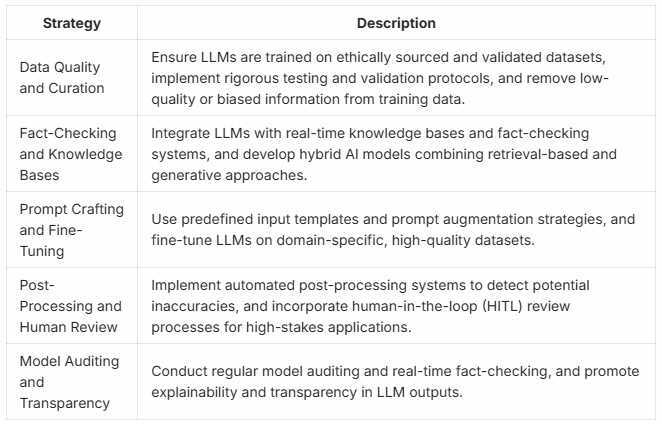

While single-use LLMs and their limitations present challenges in delivering tailored business insights, there are strategies and approaches that can help mitigate these issues and unlock the full potential of these powerful language models.

Identifying and Addressing Hallucination Errors

One of the critical steps in overcoming the limitations of single-use LLMs is identifying and addressing hallucination errors. These errors occur when LLMs generate incorrect or fabricated information due to their inability to understand context and verify facts.

To detect hallucination errors, businesses can implement error detection techniques, such as user feedback loops and cross-referencing LLM outputs with verified data sources. By actively monitoring and identifying these errors, organizations can take corrective measures and refine their models for improved accuracy.
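Cross-referencing can be as simple as comparing figures stated in an LLM output against a trusted record. The sketch below assumes the claims have already been extracted from the text as name/value pairs, which is a separate problem not shown here. The metric names, values, and the 1% tolerance are all hypothetical.

```python
def check_claims(claims, verified, rel_tol=0.01):
    """Label each claimed figure as supported, contradicted, or unverifiable."""
    report = {}
    for name, claimed in claims.items():
        if name not in verified:
            # No trusted value to compare against.
            report[name] = "unverifiable"
        elif abs(claimed - verified[name]) <= rel_tol * abs(verified[name]):
            report[name] = "supported"
        else:
            report[name] = "contradicted"
    return report

# Hypothetical verified figures, e.g. from a finance system.
verified = {"q3_revenue_musd": 4.2, "active_customers": 1250}
# Hypothetical figures extracted from an LLM-generated summary.
claims = {"q3_revenue_musd": 4.2, "active_customers": 1900, "churn_rate_pct": 3.1}

report = check_claims(claims, verified)
flagged = sorted(name for name, status in report.items() if status != "supported")
print(flagged)  # ['active_customers', 'churn_rate_pct']
```

Keeping "unverifiable" separate from "contradicted" matters in practice: the first means the data source has a gap, the second means the model's output disagrees with it.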

Additionally, mitigation strategies like integrating LLM outputs with rule-based systems or human oversight can help minimize the impact of hallucination errors. By combining the strengths of LLMs with the domain expertise and fact-checking capabilities of human experts, businesses can ensure that the insights generated are accurate, contextualized, and tailored to their specific needs.

Integrating LLMs with Human Oversight and Verification

To fully leverage the power of LLMs while addressing their limitations, businesses are increasingly exploring human-in-the-loop systems. These systems combine the natural language processing capabilities of LLMs with the contextual understanding and fact-checking abilities of human experts.

In a human-in-the-loop system, LLMs generate initial insights or outputs, which are then reviewed and verified by human experts. This collaborative approach ensures that the insights are accurate, contextualized, and tailored to the specific business needs. Human experts can provide feedback, make corrections, and guide the LLM towards more relevant and reliable outputs.
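The review step described above might be wired up roughly as follows. Everything here is a hypothetical sketch: `Review`, `human_in_the_loop`, and `demo_reviewer` are illustrative names, and the reviewer is a stand-in function where a real system would present the draft to a person.

```python
from dataclasses import dataclass

@dataclass
class Review:
    approved: bool
    corrected_text: str = ""
    note: str = ""

def human_in_the_loop(draft, reviewer, feedback_log):
    """Pass an LLM draft through a reviewer and record any correction."""
    review = reviewer(draft)
    if review.approved:
        return draft
    # Keep the correction so it can later guide prompts or model refinement.
    feedback_log.append(
        {"draft": draft, "correction": review.corrected_text, "note": review.note}
    )
    return review.corrected_text

def demo_reviewer(draft):
    """Stand-in for a human expert: rejects overconfident forecasts."""
    if "guaranteed" in draft.lower():
        return Review(
            approved=False,
            corrected_text=draft.replace("guaranteed", "projected"),
            note="Forecasts are not guarantees.",
        )
    return Review(approved=True)

feedback_log = []
final = human_in_the_loop(
    "Revenue growth of 8% is guaranteed next quarter.", demo_reviewer, feedback_log
)
print(final)              # Revenue growth of 8% is projected next quarter.
print(len(feedback_log))  # 1
```

The feedback log is the part that closes the loop: without it, each correction fixes one output but teaches the system nothing.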

Successful implementations of human-in-the-loop systems have been observed in various business sectors, such as financial analysis, legal research, and content creation. For example, in the legal domain, LLMs can assist in identifying relevant case law and generating initial drafts of legal documents, which are then reviewed and refined by human lawyers to ensure accuracy and compliance.

By integrating LLMs with human oversight and verification, businesses can leverage the strengths of both artificial and human intelligence, delivering tailored and reliable business insights that drive strategic decision-making and foster a competitive advantage.

Conclusion

In the rapidly evolving business landscape, where data-driven insights are key to success, the limitations of single-use LLMs present significant challenges. However, by understanding these limitations, exploring approaches like Retrieval-Augmented Generation (RAG), and integrating LLMs with human oversight and verification, businesses can unlock the true potential of these powerful language models.

The need for tailored business insights will only continue to grow as organizations strive to gain a competitive edge and make informed decisions. By addressing the challenges posed by single-use LLMs and embracing a more nuanced approach, businesses can leverage the strengths of both artificial and human intelligence, delivering accurate, contextualized, and tailored insights that drive strategic decision-making and foster innovation.

As the field of natural language processing and language models continues to advance, we can expect to see further developments in task-specific training, improved contextual understanding, and enhanced fact-checking mechanisms. These advancements will pave the way for more sophisticated and reliable LLM-powered solutions, enabling businesses to extract even more value from their data and gain a deeper understanding of their customers, markets, and competitive landscapes.

Ultimately, the journey towards delivering truly tailored business insights is an ongoing one, requiring a collaborative effort between human experts and advanced language models. By embracing this synergy and continuously refining their approaches, businesses can unlock new opportunities, drive innovation, and stay ahead in an ever-changing and data-driven world.

Learn more about how using multiple LLM systems can create better outputs for a business's needs!